Comixology - Digital Comics Analysis (Part 1 - analysis of site for web scraping)

May 01, 2016

Introduction

In this post, I’ll talk about a project I recently decided to do: an analysis of the digital comics market. I have liked the subject for quite some time, and I wanted to get a general idea of the comics and publishers (especially the giants, Marvel and DC Comics).

Since I had already used Comixology to read comics, I chose to analyze it. Comixology is a website that sells digital comics, with a huge catalog and many publishers: big, small and even independent ones. If you want to check it out: www.comixology.com

Since Comixology does not have an API, and there is no dataset to download or anything like that, I had to do web scraping. What is web scraping? Web scraping is the act of extracting information from structured HTML documents (websites). Basically, you access a web address programmatically and extract the information you want from the HTML source code, by identifying patterns and where in the code the information is. Because modern sites are usually generated by frameworks and templates, it is usually reasonable to assume that their pages share a structure, with repeatable patterns that your code can reproduce. This is a good lesson: in data analysis, the data is sometimes not available in an easy, accessible way, so you have to develop another means to get it.

To do the scraping, we will use two Python libraries that come installed with Anaconda. The first is lxml, which parses HTML, XML and other formats, used together with XPath (XPath is not a Python library but a query language for selecting parts of an XML document; we will talk more about it later). The second is requests, used to make (guess what) HTTP requests to web pages and return their source code (among other things).
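To show how the two libraries fit together, here is a minimal sketch that assumes nothing about Comixology's real markup: `requests` downloads a page, `lxml.html` parses it, and an XPath expression selects the links. The function names `fetch_tree` and `extract_links`, and the XPath in the demo, are illustrative only.

```python
import lxml.html
import requests


def fetch_tree(url):
    """Download a page and parse it into an lxml element tree."""
    response = requests.get(url, timeout=30)
    response.raise_for_status()  # fail loudly on 4xx/5xx instead of parsing an error page
    return lxml.html.fromstring(response.content)


def extract_links(tree, xpath):
    """Return the values selected by an XPath expression (e.g. href attributes) as plain strings."""
    return [str(value) for value in tree.xpath(xpath)]


if __name__ == "__main__":
    # Offline demo on an inline HTML snippet, so no request is made here.
    html = """
    <ul class="list">
      <li><a href="/archie">Archie</a></li>
      <li><a href="/aspen">Aspen</a></li>
    </ul>
    """
    tree = lxml.html.fromstring(html)
    print(extract_links(tree, '//ul[@class="list"]/li/a/@href'))  # ['/archie', '/aspen']
```

The XPath `//ul[@class="list"]/li/a/@href` reads as "the `href` of every link inside a list item of the list with class `list`", which is the kind of pattern the rest of this analysis is looking for on each page.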

This post is the first in a series of three, each covering one part of the project. In this first one, I will talk about the analysis I did to understand the website's structure and how I could prepare to write the scraping code. In the second post, I’ll present the scraping code itself, explaining each part of it. And finally, in the third one, I will do some exploratory analysis of the scraped data (expect lots of tables and charts).

Let’s start with the first part: analyzing the website and preparing for the scraping.

The Website

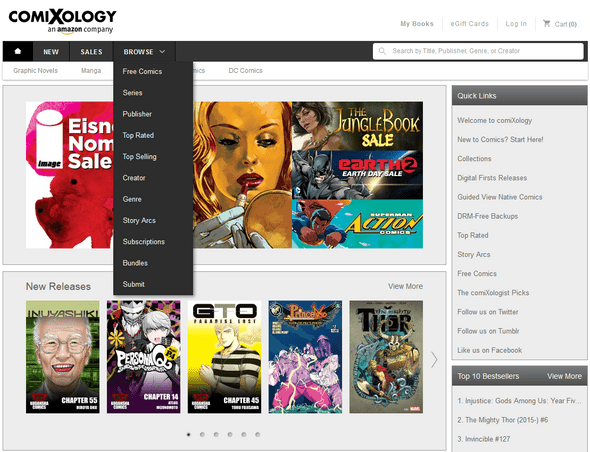

Well, my first step, as I mentioned, was to evaluate the website itself, so I could understand how to do the scraping. You need to understand the structure: where the information is and how you will reach it. The best scenario would be a place on the website where all the links to the comics were together, or at least connected in some way. So I went to the “Browse” menu, the seemingly obvious option:

From top to bottom: “Free Comics” did not seem a good option, because it would obviously limit me to free comics, which are probably a minority of the total. “Series” could be an option, but I was not sure every comic on the website was part of a series, so I put it aside for a moment. The next option, “Publishers”, looked promising, because it seemed plausible that every comic is related to a Publisher, even an independent one. Next, “Top Rated” and “Top Selling” were also discarded, since both would limit the number of comics. The following two options, “Creator” and “Genre”, also seemed good, since it was also plausible that every comic is related to a creator and a genre. In the end, I opted to go with “Publishers”, so that is what I analyzed next.

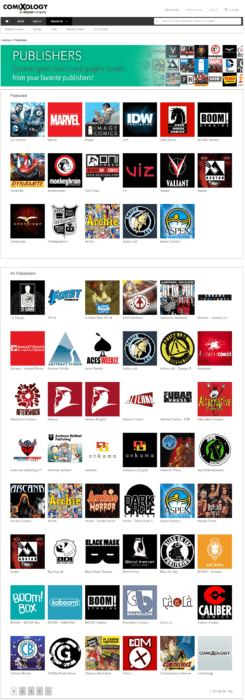

As we can see, this page is divided into two parts. The first one has a table of “Featured” publishers. Again, since my objective was to analyze the entire website, this table is limiting. The second part, however, has an “All Publishers” table. There it is. We will continue from this table.

This table is split into pages. It shows 48 publishers per page, except for the last page, which can have 48 or fewer. So our strategy for this part is to extract the link of each publisher on a page, go to the next page and repeat the extraction, up to the last page. That means the code has to know the number of pages somehow. Since the number of Publishers probably does not vary much, I entered the number of Publisher pages into the code manually. For each page, the code finds the number of Publishers on that page, extracts the link of each one, and then moves on to the next page, until there are no more pages to go through. We cannot assume every page has 48 Publishers, because of the last page, so we always need to count the Publishers on each page. The links to the Publishers will be stored in a Python list, so they can be accessed later.
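The loop described above might look like this sketch. As in the post, the number of pages is hard-coded, but the number of Publishers is counted on every page instead of being assumed to be 48. The URL pattern, the XPath and the page count are hypothetical placeholders, not Comixology's real markup, and `fetch_html` (any callable that takes a URL and returns HTML) is injected so the loop can be tried without touching the network.

```python
import lxml.html

# Hypothetical placeholders: the real URL pattern and markup must be read from the site.
PUBLISHER_LIST_URL = "https://www.comixology.com/browse-publisher?page={page}"
PUBLISHER_LINK_XPATH = '//div[@class="publisher-list"]//a/@href'
NUM_PUBLISHER_PAGES = 3  # typed in by hand; placeholder value, not the real count


def collect_publisher_links(fetch_html, num_pages=NUM_PUBLISHER_PAGES):
    """Visit every page of the publisher list and gather each Publisher's link."""
    publisher_links = []
    for page in range(1, num_pages + 1):
        tree = lxml.html.fromstring(fetch_html(PUBLISHER_LIST_URL.format(page=page)))
        links = tree.xpath(PUBLISHER_LINK_XPATH)
        # len(links) is the number of Publishers on this page: 48 on most pages,
        # possibly fewer on the last one, so nothing is assumed in advance.
        publisher_links.extend(str(link) for link in links)
    return publisher_links
```

In real use, `fetch_html` could be `lambda url: requests.get(url, timeout=30).text`.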

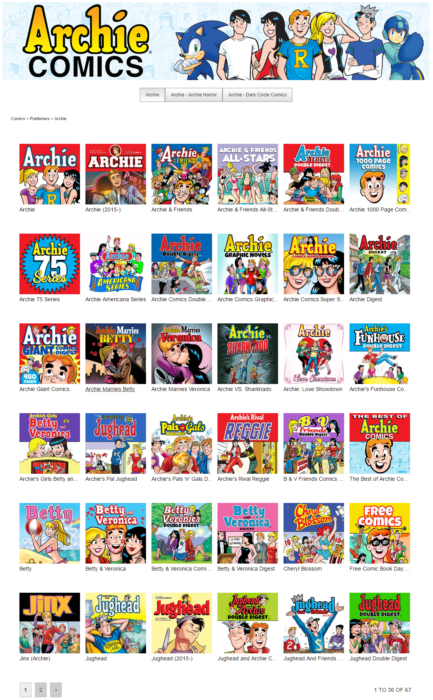

Now, let’s open the links to some Publishers to see the structure of the pages and how they differ from each other. For example, I opened Archie, Aspen and Marvel.

At first sight, starting with Archie, it looks like a pretty common e-commerce layout. Archie had two pages of comic series. The items on the Publisher page may look like comics, but they are not: they are series of comics. On Aspen's page, we found our first special case: Bundles. Bundles are groups of comics that the buyer gets at a discount for buying them all together. Since we would already get all the comics through the series, I chose not to include bundles in my analysis, so my scraping code would need to get only the links to the series, not the bundles.
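One way to keep only the series links is to filter the collected URLs. The sketch below assumes, purely hypothetically, that bundle pages can be recognised by a `bundle` segment in their URL path; the real rule has to be checked against the site (selecting only the series section of the page with XPath would be an alternative).

```python
from urllib.parse import urlparse

# Hypothetical rule: assumes bundle URLs contain a "bundle" path segment.
BUNDLE_MARKER = "bundle"


def keep_series_only(links, marker=BUNDLE_MARKER):
    """Drop bundle links, keeping only links that point to comic series."""
    series = []
    for link in links:
        segments = urlparse(link).path.lower().split("/")
        if marker not in segments:
            series.append(link)
    return series
```

Matching whole path segments rather than substrings avoids discarding a series whose title merely contains the word "bundle".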

Marvel had a large number of series, spread over many pages that could be navigated with the arrows or with an input box where you type the page you want to go to. Marvel also has bundles.

So, to summarize, we need to go to each Publisher link, discover the number of pages, and then get the link to each series, just as we did to get the link to each Publisher: get the number of series on a page, get the link for each series, go to the next page of series and repeat the process until there are no more pages.
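Unlike the Publisher list, the number of series pages differs for every Publisher, so it has to be discovered from the page itself. This sketch assumes, hypothetically, that the pager shows the page numbers as link text and that further pages are reached with a `?page=N` query parameter; both XPaths are placeholders.

```python
import lxml.html

# Hypothetical XPaths: adjust to the real pager and series-list markup.
PAGER_XPATH = '//div[@class="pager"]//a/text()'
SERIES_LINK_XPATH = '//div[@class="series-list"]//a/@href'


def count_pages(tree):
    """Read the highest page number shown in the pager; no pager means one page."""
    numbers = [int(text) for text in tree.xpath(PAGER_XPATH) if text.strip().isdigit()]
    return max(numbers, default=1)


def collect_series_links(publisher_url, fetch_html):
    """Gather the series links from every page of one Publisher."""
    first_page = lxml.html.fromstring(fetch_html(publisher_url))
    series_links = []
    for page in range(1, count_pages(first_page) + 1):
        # Reuse the already downloaded first page; fetch the others.
        if page == 1:
            tree = first_page
        else:
            tree = lxml.html.fromstring(fetch_html(f"{publisher_url}?page={page}"))  # hypothetical parameter
        series_links.extend(str(link) for link in tree.xpath(SERIES_LINK_XPATH))
    return series_links
```

Non-numeric pager entries such as "Next" arrows are ignored when counting pages.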

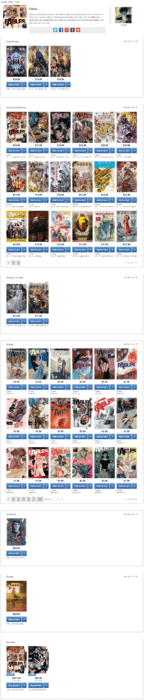

Now, let’s move on to the series. The comics in a series are divided into types, or categories, like Issues, Collected Editions and many others. To cover all the different types, I will open some big series, because they probably contain many different types of comics. This step took some research: I had to open lots of series to find all the different cases. For this post, I’ll open a few series that together cover all the types: Batman (1940-2011), Fables and Adrasté (this one because it is French, and in this case the comics are called “Bandes Dessinées”). These three series cover the types of comics I encountered and that we will have to extract.

Starting with Batman, we notice a first type, “Recent Additions”. However, the comics in this category also appear in other categories, so it can be left out of our analysis. Bundles also appear among the categories, so we can disregard them here too. All the other categories will be considered in our analysis: Omnibuses, Collected Editions, Graphic Novels, Issues, Artbooks, Extras, One-Shots and Bandes Dessinées.

Each of these types, as with Publishers and Series, can have one or more pages. Although multiple pages are most common in the “Issues” type, I eventually found multiple pages in other types in some series. That said, for each series we have to go through each type of comic and do the same thing we did with Series and Publishers: get the number of pages for a category, extract the links on that page, go to the next page of that category and repeat the extraction until there are no more pages. Then we go to the next category and repeat the process until there are no more categories. Then we can go to the next series.
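The nested walk over categories and pages can be sketched independently of the HTML details. Here `count_pages` and `links_on_page` are hypothetical helpers (they would wrap the requests/lxml calls for one category of one series); the skipped categories are the two the analysis above leaves out.

```python
# "Recent Additions" duplicates other categories and "Bundles" are excluded
# from the analysis, so both are skipped.
SKIPPED_CATEGORIES = {"Recent Additions", "Bundles"}


def collect_comic_links(series_url, categories, count_pages, links_on_page):
    """Walk every page of every relevant category of one series.

    count_pages(series_url, category) -> int and
    links_on_page(series_url, category, page) -> list of URLs
    are hypothetical helpers supplied by the caller.
    """
    comic_links = []
    for category in categories:
        if category in SKIPPED_CATEGORIES:
            continue
        for page in range(1, count_pages(series_url, category) + 1):
            comic_links.extend(links_on_page(series_url, category, page))
    return comic_links
```

Passing the helpers in as arguments keeps the traversal logic testable with fake data before any real page is downloaded.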

Now, we have to analyze a comic page, to understand what information we can get from it. Let’s open a comic from the series we were already looking at: Batman (1940-2011) #181.

As we can see, there is a lot of information to get: name, price, rating, number of ratings, publisher, writer, artist, genre, page count, release date and age rating, among others. If we go to the Sales page and open one of the comics there, we also notice that some comics have discounted prices. Getting this information might be interesting, even though it varies a lot depending on when you do the analysis.
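Extracting the fields of a comic page could follow the pattern below: one XPath per field, with `None` when a field is absent, which covers the discounted price that only exists during sales. All class names and XPaths here are hypothetical placeholders, and only a few of the fields listed above are shown.

```python
import lxml.html

# Hypothetical XPaths: the real class names must be read from a comic page.
FIELD_XPATHS = {
    "name": '//h1[@class="title"]/text()',
    "price": '//span[@class="item-price"]/text()',
    "discounted_price": '//span[@class="discount-price"]/text()',
    "publisher": '//a[@class="publisher"]/text()',
    "page_count": '//dd[@class="page-count"]/text()',
}


def parse_comic(html):
    """Extract one comic's fields into a dict; missing fields become None."""
    tree = lxml.html.fromstring(html)
    comic = {}
    for field, xpath in FIELD_XPATHS.items():
        values = [text.strip() for text in tree.xpath(xpath) if text.strip()]
        # e.g. no discounted price outside a sale
        comic[field] = values[0] if values else None
    return comic
```

Values are kept as stripped strings here; converting prices and page counts to numbers is left for the analysis step.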

So, to summarize everything:

- We start our analysis with the Publishers. Publishers have series of comics, and each series has different types of comics, like Issues, Collected Editions and many more.

- The central idea of the scraping code is to extract the link of each Publisher by going through each page of the Browse by Publisher page: extract the links to each Publisher on the page, go to the next page and repeat until there are no more pages.

- With the links to the Publishers, we access each link and use a similar process: discover the number of pages of series for the Publisher and the number of series on each page, and extract the links of the series on each page. Once the pages are done, we move on to the next Publisher, until there are no more Publishers.

- In the Series, the process is similar, but we must go through all the pages of each category of comics, extracting the links. After going through every page of a category, we move on to the next category, and when we finish the categories, we move on to the next Series.

- The final step is to access the link to each comic and extract the desired information.
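The summary above maps onto three nested loops. This sketch only shows that overall shape; `series_links_for`, `comic_links_for` and `parse_comic_at` are hypothetical stand-ins for the per-level helpers (series of a Publisher, comics of a series, details of a comic), not the code of the next post.

```python
def scrape_catalog(publisher_links, series_links_for, comic_links_for, parse_comic_at):
    """Publishers -> series -> comics -> details, in the order summarised above.

    The three callables are hypothetical helpers supplied by the caller.
    """
    comics = []
    for publisher_url in publisher_links:
        for series_url in series_links_for(publisher_url):
            for comic_url in comic_links_for(series_url):
                comics.append(parse_comic_at(comic_url))
    return comics
```

Keeping each level in its own function means each one can be checked separately before running the whole (long) crawl.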

The summarized explanation may seem a little confusing, but once we turn it into code, everything will become clear :)

With this, we finish our analysis of the Comixology website. In the next post, I’ll talk about the scraping code and explain it.

Stay tuned! :)