Probability and some applications in Python

April 19, 2016

Introduction

Following the last post about Statistics, this post introduces the world of Probability, presenting some of its basic concepts and showing how we can calculate some of them using Python.

Probability is a numeric measure of the chance that a certain event happens, and also the field of study concerned with calculating this measure. What is the chance that I roll a die and get a 6? What is the chance that I toss two coins and get heads on both? These questions, and many others, are answered by Probability.

The basics

Dependent and Independent Events

The first important concept of Probability that we will talk about is dependent and independent events.

Independent events are events where knowing the result of one of them does not give any information about the probability of the other happening. Rolling one die tells us nothing about the result of rolling a second one.

Analogously, dependent events are events where the result of one of them gives information about the probability of the other. For example, if what we want to know is the chance of getting at least one 6 when rolling two dice, the result of the first die gives us information: if it is a 6, the event has already happened.
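The difference between the two kinds of events can be checked by listing every outcome of two dice and comparing a probability before and after we learn the result of the first die. The sketch below is only an illustration: the event "at least one 6" and the helper names `prob` and `prob_given` are assumptions made for this example, not something from a library.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (first die, second die)
outcomes = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability of an event given as a predicate over one outcome."""
    favorable = [o for o in outcomes if event(o)]
    return Fraction(len(favorable), len(outcomes))

def prob_given(event, known):
    """Probability of `event` counted only among outcomes where `known` holds."""
    restricted = [o for o in outcomes if known(o)]
    favorable = [o for o in restricted if event(o)]
    return Fraction(len(favorable), len(restricted))

def first_is_6(o):
    return o[0] == 6

def second_is_6(o):
    return o[1] == 6

def at_least_one_6(o):
    return 6 in o

# Independent: learning the first die does not change the second
print(prob(second_is_6), prob_given(second_is_6, first_is_6))        # 1/6 1/6

# Dependent: learning the first die changes the chance of "at least one 6"
print(prob(at_least_one_6), prob_given(at_least_one_6, first_is_6))  # 11/36 1
```

The first pair of numbers is identical, which is what independence looks like; the second pair differs, which is what dependence looks like.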

For independent events, the probability of both events happening is the probability of one multiplied by the probability of the other. The probability of rolling any given number on one die is 1/6, so the probability of rolling two consecutive 6s is calculated as follows.

Let P(A) be the probability that event A happens, P(B) the probability that event B happens, and P(A,B) the probability that both events happen:

P(A,B) = P(A) × P(B)

probability_of_6 = 1/6

# Probability of rolling two sixes with two dice

print(probability_of_6 * probability_of_6)

0.027777777777777776

This is the probability of rolling two sixes with two dice. Let’s use the Fraction class from the fractions module to show that this number is equivalent to 1/36, which is the exact result of 1/6 multiplied by 1/6. The call to limit_denominator() is needed because 1/6 is stored as a floating-point approximation, not as an exact fraction:

# Using Fraction to return the value in fraction format

from fractions import Fraction

print(Fraction(probability_of_6 * probability_of_6).limit_denominator())

1/36

Complementary Event

The complementary event is a simple concept. The complement of an event A is the event that includes all outcomes that are not in A. Again using a die roll as our example, if event A is rolling a number less than or equal to 2, which has probability 2/6, its complementary event is rolling a number greater than 2, with probability 4/6. Calculating the probability of a complementary event is simple: subtract the probability of event A from 1. Since this is an extremely simple example, I feel there is no need to show it as code.

This concept can be useful in cases where it is easier to calculate the probability of the complementary event than the probability of the event itself. Let’s imagine, for example, that we want to know the probability of getting two different numbers when rolling two dice. For this case, there are a lot of possible results. However, the complementary event is getting two equal numbers, and for this event the possible results reduce to (1,1), (2,2), (3,3), (4,4), (5,5) and (6,6). With that information, we can easily calculate the probability of the complementary event, 6/36, and consequently the probability of the desired event: 1 - 6/36 = 30/36.
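The two-dice case can be checked by enumeration. The following is a minimal sketch, assuming two fair six-sided dice; it computes the probability through the complement and then cross-checks it by counting the desired outcomes directly. The variable names are made up for this example.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of two dice
outcomes = list(product(range(1, 7), repeat=2))

# Complementary event: both dice show the same number
equal_pairs = [(a, b) for a, b in outcomes if a == b]
p_equal = Fraction(len(equal_pairs), len(outcomes))

# Desired event via the complement rule: P(different) = 1 - P(equal)
p_different = 1 - p_equal

# Cross-check by counting the desired outcomes directly
different_pairs = [(a, b) for a, b in outcomes if a != b]
p_different_direct = Fraction(len(different_pairs), len(outcomes))

print(p_equal)                            # 1/6
print(p_different)                        # 5/6
print(p_different == p_different_direct)  # True
```

Fraction reduces automatically, so 30/36 is printed as 5/6.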

Mutually Exclusive Events

Two events are mutually exclusive when only one of them can occur at a time. When you toss a coin, you can only get heads or tails; you can’t get both simultaneously. On a die, the rolled number can only be even or odd. No number on the die meets both conditions at the same time.

For two mutually exclusive events A and B, P(A,B), the probability that both occur, is equal to zero. However, we can calculate P(A or B), which for this type of event is equal to P(A) + P(B). Considering the previous die example of even and odd numbers, P(A or B) is 1, since any number is either even or odd. For another example, in a deck of cards, let’s consider the probability that a card is a Jack. This probability, P(A), is equal to 4/52, or 1/13, since there are 4 Jacks in a deck of 52 cards. The probability that a card is an Ace, P(B), is the same. P(A,B), the probability that a card is both a Jack and an Ace, is zero, since a card cannot be both. But P(A or B) is equal to P(A), the probability that a card is a Jack, plus P(B), the probability that a card is an Ace. That results in 1/13 + 1/13 = 2/13. Again, since this is a basic math subject, I don’t believe there is a need to show it in code.
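Even so, a quick enumeration can serve as a sanity check of the addition rule. This is a small sketch that builds a standard 52-card deck as (rank, suit) pairs; the representation and the variable names are assumptions chosen for this example.

```python
from fractions import Fraction
from itertools import product

# A standard deck: 13 ranks x 4 suits = 52 cards
ranks = ["A"] + [str(n) for n in range(2, 11)] + ["J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
deck = list(product(ranks, suits))

jacks = {card for card in deck if card[0] == "J"}
aces = {card for card in deck if card[0] == "A"}

p_jack = Fraction(len(jacks), len(deck))
p_ace = Fraction(len(aces), len(deck))

# Mutually exclusive: no card is in both sets, so P(A,B) = 0
p_both = Fraction(len(jacks & aces), len(deck))

# P(A or B), counted directly from the union of the two sets
p_either = Fraction(len(jacks | aces), len(deck))

print(p_both)                              # 0
print(p_either)                            # 2/13
print(p_either == p_jack + p_ace)          # True
```

The last line holds only because the two sets do not overlap; for events that can happen together, the overlap would be counted twice by a plain sum.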

Conditional Probability

For dependent events, the calculation of probabilities changes. Let’s establish the following:

- P(A|B) -> Conditional probability of A given B or, in other words, the probability that event A happens, given that we know that event B happened.

- P(A,B) -> As we already saw, the probability that both events happen.

- P(A) and P(B) -> Also as we already saw, the probability that each event happens.

The conditional probability is calculated as follows (this requires P(B) > 0):

P(A|B) = P(A,B)/P(B)

Sometimes we move P(B) to the other side of the equation, which gives:

P(A,B) = P(A|B) × P(B)

Let’s see an example and some code. We will consider a single die. Event A is rolling an odd number, and event B is rolling a 5 or a 6. Let’s calculate the probability of A given B:

P(A|B) = ??

P(A) = 3/6

P(B) = 2/6

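P(A,B) = 1/6 ≈ 0.1667, or 16.67%, because the only outcome that satisfies both events is the number 5. In this example this also equals P(A) × P(B) = 3/6 × 2/6, which is true only because these two particular events happen to be independent.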

P(A|B) = (1/6)/(2/6)

Let’s check the code to get to the final answer:

# Probability of event A: the number rolled is odd

probability_odd = 3/6

# Probability of event B: the number rolled is a 5 or a 6

probability_5_or_6 = 2/6

# Probability of both events: 5 is the only odd number in {5, 6}, so P(A,B) = 1/6

# (this equals P(A) * P(B) only because these two events happen to be independent)

probability_both = 1/6

print(probability_both)

0.16666666666666666

print(Fraction(probability_both).limit_denominator())

1/6

# Probability of A given B is equal to P(A,B) / P(B)

probability_a_given_b = probability_both / probability_5_or_6

print(probability_a_given_b)

0.5

print(Fraction(probability_a_given_b).limit_denominator())

1/2

Let’s interpret some of these results. First, in the calculation of the probability of both events, the result is 1/6. Let’s analyze the events separately. For event A, rolling an odd number, there are three numbers that meet the condition: 1, 3 and 5. For event B, rolling a 5 or a 6, there are two numbers that meet the condition: obviously, 5 and 6. Analyzing it all together, the only number that meets both conditions is 5. Thus we get 1/6, the probability of rolling a 5 on the die.

Now, let’s analyze the probability of A given B. Considering that B happened, we know that the rolled number is 5 or 6. Of these two, the only number that meets the condition of event A is 5. In the universe of possibilities of B, we have one result that meets the condition of event A. So, we have 1/2, half of the possibilities. This result also shows that the events are independent: P(A|B) = 1/2 is the same as P(A) = 3/6, so knowing that B happened does not change the probability of A.

Let’s calculate and quickly analyze the probability P(B|A):

probability_b_given_a = probability_both / probability_odd

print(probability_b_given_a)

0.3333333333333333

print(Fraction(probability_b_given_a).limit_denominator())

1/3

In this case, we know that event A, rolling an odd number, already happened. So, we have a 1, 3 or 5. Among these, the only one that meets the condition of event B, rolling a 5 or a 6, is 5 itself. So, out of 3 possible results of A, only one meets the condition of event B, giving us a probability for B given A of 1/3.
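The same numbers can be obtained without assuming anything about independence, by representing each event as a set of die faces and counting. This is a minimal sketch: the helpers `prob` and `cond_prob` and the extra event C are assumptions introduced for illustration.

```python
from fractions import Fraction

sample_space = set(range(1, 7))                    # faces of one die
A = {n for n in sample_space if n % 2 == 1}        # odd number: {1, 3, 5}
B = {5, 6}                                         # a 5 or a 6

def prob(event):
    """Probability of an event (a set of faces) on a fair die."""
    return Fraction(len(event), len(sample_space))

def cond_prob(event, given):
    """P(event | given) = P(event, given) / P(given)."""
    return prob(event & given) / prob(given)

print(prob(A & B))       # 1/6
print(cond_prob(A, B))   # 1/2
print(cond_prob(B, A))   # 1/3

# A and B are independent: P(A,B) equals P(A) * P(B)
print(prob(A & B) == prob(A) * prob(B))   # True

# A hypothetical event C = {4, 5, 6} is NOT independent of A
C = {4, 5, 6}
print(prob(A & C) == prob(A) * prob(C))   # False
```

For C, the only shared face is still 5, so P(A,C) = 1/6, while P(A) × P(C) = 1/4. Multiplying the individual probabilities would give the wrong joint probability there, which is why counting the intersection is the safer general approach.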

Bayes Theorem

Bayes Theorem is a very important concept in Probability and one of the most important tools that a Data Scientist should learn. It is used in many real applications, such as spam email filtering. Bayes Theorem lets us calculate conditional probabilities in reverse: it gives us P(A|B) when what we know is P(B|A).

Let’s consider the classic example to explain the Theorem. We have a certain disease and a test T, used to detect this disease. The test is not 100% accurate: it sometimes indicates that a person is sick when they don’t have the disease (false positives) and sometimes fails to detect the disease in people that have it (false negatives). Let’s consider that 1% of the population has the disease and, consequently, 99% do not have it. The T test detects the disease in a sick person in 90% of the cases, and does not detect it in 10% of the cases. In a person who is not sick, the test detects the disease (wrongly) in 5% of the cases, and does not detect it (correctly) in 95% of the cases. Let’s summarize it like this:

| | Sick Person (1%) | Not Sick Person (99%) |
|---|---|---|
| Positive Test | 90% | 5% |
| Negative Test | 10% | 95% |

Now, let’s suppose that you took the test and the result is positive. How do we interpret this result? Obviously, there is a chance that you really have the disease and a chance that you don’t. For a random person, the chance of having the disease and the test detecting it is equal to the chance of having the disease (1%) multiplied by the chance that the test correctly detects it (90%). Analogously, the chance of not having the disease but the test detecting it anyway (false positive) is equal to the chance of not having the disease (99%) multiplied by the chance that the test detects it incorrectly (5%). Repeating this for all the results, we have the following table:

| | Sick Person (1%) | Not Sick Person (99%) |
|---|---|---|
| Positive Test | Prob Sick and Pos Test: 1% × 90% = 0.9% | Prob Not Sick and Pos Test: 99% × 5% = 4.95% |
| Negative Test | Prob Sick and Neg Test: 1% × 10% = 0.1% | Prob Not Sick and Neg Test: 99% × 95% = 94.05% |

These are the probabilities for each case, given a random person. Note that if we sum all the probabilities, we end up with 1, or 100%. But one question remains: if our test is positive, what is really the chance that we have the disease? By the basic calculation of probabilities, the chance of having the disease is equal to the probability of the desired event divided by the probability of the entire universe of possibilities. In this case, the desired event (being sick and testing positive) has probability 0.9%. The entire universe of possibilities is the sum of the probabilities of all positive tests, 0.9% + 4.95% = 5.85%. Doing the math, we conclude that, given a positive test, our chance of having the disease is 0.9%/5.85% = 0.1538, or 15.38%. This is much lower than we might expect when looking at the original probabilities and the supposed accuracy of the test.
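The table and the final division can be reproduced with exact fractions. The following is a sketch using the numbers from the example above; the dictionary layout and the variable names are assumptions made for this illustration.

```python
from fractions import Fraction

p_sick = Fraction(1, 100)                 # 1% of the population is sick
p_healthy = 1 - p_sick                    # 99% is not
p_pos_given_sick = Fraction(90, 100)      # test is positive in 90% of sick people
p_pos_given_healthy = Fraction(5, 100)    # test is positive in 5% of healthy people

# Joint probabilities: one entry per cell of the table
joint = {
    ("sick", "positive"): p_sick * p_pos_given_sick,
    ("sick", "negative"): p_sick * (1 - p_pos_given_sick),
    ("healthy", "positive"): p_healthy * p_pos_given_healthy,
    ("healthy", "negative"): p_healthy * (1 - p_pos_given_healthy),
}

# The four cells cover every possibility, so they must sum to 1
assert sum(joint.values()) == 1

# Universe of possibilities once we know the test is positive
p_positive = joint[("sick", "positive")] + joint[("healthy", "positive")]

# Chance of being sick given a positive test
p_sick_given_positive = joint[("sick", "positive")] / p_positive

print(p_positive)                             # 117/2000, i.e. 5.85%
print(p_sick_given_positive)                  # 2/13
print(f"{float(p_sick_given_positive):.4f}")  # 0.1538
```

Working with Fraction avoids floating-point rounding and shows that 15.38% is exactly 2/13.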

Consider the following, where D means that the person has the disease and A means that the test result is positive:

P(D|A) = Probability of having the disease, given a positive test (this is the probability that we want to know)

P(D) = Probability that a person has the disease = 1%

P(A|D) = Probability that the test is positive in a person with the disease = 90%

P(A|¬D) = Probability that the test is positive in a person that does not have the disease = 5%

P(¬D) = Probability that a person does not have the disease = 99%

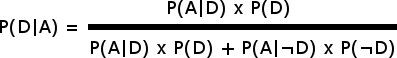

Bayes Theorem says that:

Let’s see some code to make these calculations and confirm the result of 15.38% that we just got:

# The probability that a person has the disease is 1%;

# consequently, the probability of someone not having it is 99%

prob_disease = 0.01

prob_not_disease = 1 - prob_disease

# The test T is not 100% accurate: it sometimes detects the disease

# in a person that is not sick and sometimes misses it in sick people.

# The test detects the disease in sick people in 90% of the cases

# and does not detect it in sick people in 10% of the cases.

# Note: despite the "_and_" in their names, the four variables below hold conditional probabilities, such as P(positive test | disease)

prob_pos_test_and_disease = 0.9

prob_neg_test_and_disease = 0.1

# The test detects the disease in a not sick person in 5% of the cases

# and it does not detect the disease in a person that is not sick in 95% of the cases

prob_pos_test_and_not_disease = 0.05

prob_neg_test_and_not_disease = 0.95

# True positive: chance that a person has the disease and the test result is positive

prob_true_positive = prob_disease * prob_pos_test_and_disease

# True negative: chance that a person does not have the disease and the test result is negative

prob_true_negative = prob_not_disease * prob_neg_test_and_not_disease

# False positive: chance that a person does not have the disease and the test result is positive

prob_false_positive = prob_not_disease * prob_pos_test_and_not_disease

# False negative: chance that a person has the disease and the test result is negative

prob_false_negative = prob_disease * prob_neg_test_and_disease

# I took the test and the result is positive. What is the chance that I have the disease?

# Bayes Theorem: P(D|A) = (P(A|D) * P(D)) / (P(A|D) * P(D) + P(A|¬D) * P(¬D))

# P(D|A) = Probability of disease given a positive test. This is what we want to calculate

# P(A|D) = Probability of a positive test given that you have the disease -> 90%

# P(D) = Probability that a person has the disease -> 1%

# P(A|¬D) = Probability of a positive test given that you are not sick -> 5%

# P(¬D) = Probability that a person does not have the disease -> 99%

# Now we will use shorter variable names so that the equation does not get too long

P_A_D = prob_pos_test_and_disease

P_D = prob_disease

P_A_ND = prob_pos_test_and_not_disease

P_ND = prob_not_disease

prob_disease_given_positive = (P_A_D * P_D) / (P_A_D * P_D + P_A_ND * P_ND)

print(prob_disease_given_positive)

0.15384615384615385

And just like that, we can calculate conditional probabilities in reverse, as we discussed before. Bayes Theorem is useful for calculating the real probabilities in these cases, because the accuracy of the test does not tell the whole story. In the case of the spam filter, the probabilities become the probability that a spam email contains certain words and the probability that an email that is not spam contains the same words. For a more complete treatment, see the Wikipedia article on Bayes Theorem.
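One way to see why the accuracy of the test does not tell the whole story is to keep the test fixed and vary how common the disease is. The sketch below wraps the formula in a small function; the function name `posterior`, its parameter names and the list of prevalence values are hypothetical choices made for this illustration.

```python
def posterior(prior, p_pos_given_true, p_pos_given_false):
    """Bayes Theorem: probability of the condition given a positive result.

    prior              -> P(D), probability of the condition before testing
    p_pos_given_true   -> P(A|D), positive result when the condition is present
    p_pos_given_false  -> P(A|¬D), positive result when the condition is absent
    """
    evidence = p_pos_given_true * prior + p_pos_given_false * (1 - prior)
    return (p_pos_given_true * prior) / evidence

# Same test (90% detection, 5% false positives), different prevalences
for prior in (0.001, 0.01, 0.1, 0.5):
    result = posterior(prior, 0.9, 0.05)
    print(f"P(D) = {prior:.3f} -> P(D|A) = {result:.4f}")
```

With a prevalence of 1% the function reproduces the 15.38% from the example. As the disease becomes more common, the same positive result becomes much more convincing, which is the effect of the P(D) term in the formula.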

For now, that is it. I believe these are the most basic and important parts of Probability. We are still missing distributions and the Central Limit Theorem, but these will be covered in another post.

Regards!